Building for a voice user interface is a new space for many developers. I recently worked with Cathy Pearl, Head of Conversation Design Outreach here at Google, to build an Action for the community.

The following are key takeaways from our collaboration:

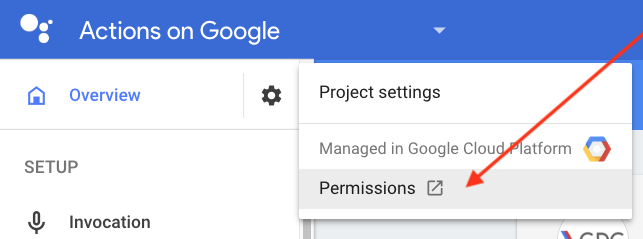

1. Providing Access to Collaborators (Designer)

At first, I submitted my Action for Alpha release and added Cathy as a tester. For her to gain access to the Action, I had to email her the Action’s link from the Actions directory (the Actions directory lists out all Actions that are available on Google Assistant). With that link, she accepted being a tester, which meant that she had access to the Action and could test out the Action on different devices. We then realized that she needed additional tools when it came to the TTS (Text to Speech) adjustments. So, I added her as an editor to the project.

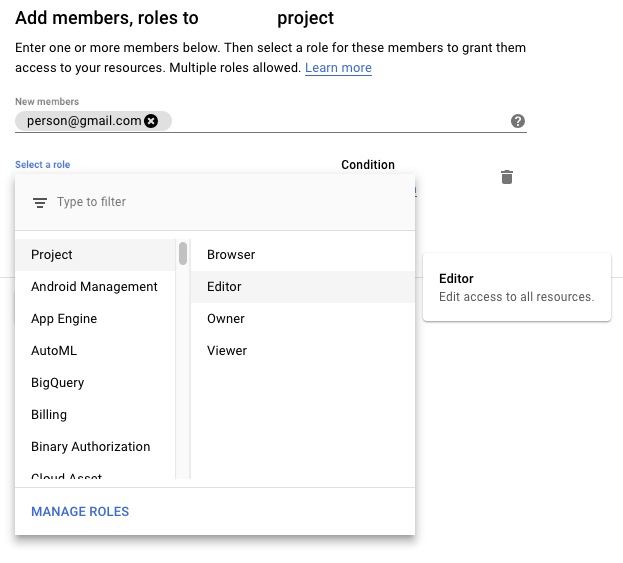

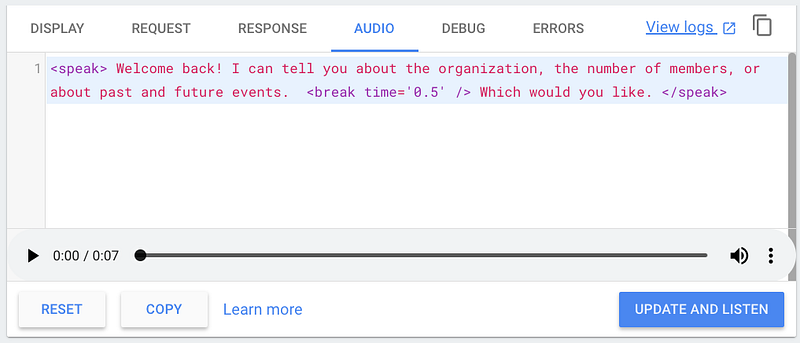

2. Fine-Tuning Your TTS (Text to Speech)

As a conversation designer, Cathy needed to hear and modify the responses to make the experience better. In the Actions Console, there is a simulator menu option where you can test out your Action. On the right side, there is a tab for the audio of what the Action says. There, you can modify the grammar and adjust the breaks to create a more natural output. I also showed Cathy the SSML reference so she could have further control over the output.

We ended up making small edits to the Welcome response text so the speech was more natural:

TEXT: “Welcome back! I can tell you about the organization, how many members we have, or about events. What would you like to know?”

SPEECH: “<speak> Welcome back! I can tell you about the organization, how many members we have, or about events. <break time='0.5' /> What would you like to know. </speak>”

Below you can hear the difference between the original (without SSML) and the updated one (with SSML):

Notes from Cathy

It’s important to listen to your prompts, and not just read them, because the TTS doesn’t always sound the way you think it will. By listening to the prompts out loud, I was able to see that we needed a brief pause between the intro message and the final question. In addition, the question sounded best with a period at the end instead of a question mark. When listening to prompts, try closing your eyes, and experiment with pauses, punctuation, and wording.
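The welcome prompt edit above can be sketched as a small helper that turns display text into SSML. This is a minimal sketch, not the code from the Action: the function name `to_ssml` and its parameters are hypothetical, and the `s` unit on the break time is an assumption based on how SSML expresses durations.

```python
from xml.sax.saxutils import escape


def to_ssml(intro: str, question: str, pause_seconds: float = 0.5) -> str:
    """Wrap a prompt in <speak>, pausing before the closing question.

    Hypothetical helper for illustration only.
    """
    # The question sounded best with a period instead of a question mark.
    if question.endswith("?"):
        question = question[:-1] + "."
    return (
        "<speak>"
        f"{escape(intro)} "                    # escape &, <, > in plain text
        f'<break time="{pause_seconds}s"/> '   # brief pause before the question
        f"{escape(question)}"
        "</speak>"
    )


welcome = to_ssml(
    "Welcome back! I can tell you about the organization, "
    "how many members we have, or about events.",
    "What would you like to know?",
)
print(welcome)
```

Keeping the display text and the spoken text as separate outputs of one source string makes it easier to experiment with pauses and punctuation without changing what the user reads on screen.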

3. More Informative No Match Statements

This particular Action is not intended for daily use; instead, it’s meant to be used about once a month. Because of this infrequency, Cathy suggested giving a little more information on the first fallback prompt instead of the rapid reprompt that is suggested in the documentation. She also modified the second prompt to include a statement about how to exit the Action and added the word “still”.

Notes from Cathy

Adding “still” is similar to someone acknowledging that you already told them something but they forgot. For example, “Sorry, what day did you say you’re leaving?” is preferred to “When are you leaving?”, which gives no indication that you already told them.

It’s important that the fallback prompts be context-sensitive and not generic. Below is an example of what we ended up using:

FALLBACKPROMPT_1: “Sorry, I didn’t quite get that. I can tell you about the organization, or when our next event is.”

FALLBACKPROMPT_2: “Sorry, I still didn’t get that. I can tell you about the organization or when our next event is, or if you’re finished just say ‘goodbye’.”

FALLBACKPROMPT_FINAL: “I’m sorry I’m having trouble. Let’s stop here for now. Goodbye.”
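One way to picture the escalation across these three prompts is a function that picks a prompt from the number of consecutive no-match turns. This is a rough sketch under assumptions: `fallback_response` and the 1-based counter are hypothetical, and how the count is tracked depends on the platform you build on.

```python
from typing import Tuple

# The first two prompts re-offer the options; the second adds "still"
# and explains how to exit.
FALLBACK_PROMPTS = [
    "Sorry, I didn't quite get that. I can tell you about the organization, "
    "or when our next event is.",
    "Sorry, I still didn't get that. I can tell you about the organization "
    "or when our next event is, or if you're finished just say 'goodbye'.",
]
FINAL_PROMPT = "I'm sorry I'm having trouble. Let's stop here for now. Goodbye."


def fallback_response(no_match_count: int) -> Tuple[str, bool]:
    """Return (prompt, end_conversation) for the nth consecutive no-match."""
    if no_match_count < 1:
        raise ValueError("no_match_count is 1-based")
    if no_match_count <= len(FALLBACK_PROMPTS):
        return FALLBACK_PROMPTS[no_match_count - 1], False
    # After the informative prompts are used up, close gracefully.
    return FINAL_PROMPT, True
```

For example, `fallback_response(2)` returns the "still" prompt and keeps the conversation open, while a third miss returns the final prompt along with a flag to end the conversation.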

4. Smarter Suggestion Chips

My project had suggestion chips that were randomly selected from an array; I did this because there were only six options. Cathy suggested showing only the options that the user has not yet picked. I ranked my suggestion chips and added an array to the conversation object (learn more about saving data). I also had each intent update the array whenever it was triggered. As a result, the suggestion chips surface only the remaining options.
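The chip filtering described above might look roughly like the following. It is only a sketch: the chip labels, the `picked_chips` key, and the plain dictionary standing in for the conversation object are all assumptions made for illustration.

```python
from typing import Dict, List

# Hypothetical chip labels, listed from highest to lowest rank.
RANKED_CHIPS = ["About us", "Members", "Next event", "Past events", "Join", "Contact"]


def record_pick(conv_data: Dict[str, List[str]], chip: str) -> None:
    """Called from each intent: remember that this option was used."""
    picked = conv_data.setdefault("picked_chips", [])
    if chip not in picked:
        picked.append(chip)


def remaining_chips(conv_data: Dict[str, List[str]], limit: int = 3) -> List[str]:
    """Return the highest-ranked chips the user has not picked yet."""
    picked = conv_data.get("picked_chips", [])
    return [chip for chip in RANKED_CHIPS if chip not in picked][:limit]


conv_data: Dict[str, List[str]] = {}   # stand-in for per-conversation storage
record_pick(conv_data, "Members")
print(remaining_chips(conv_data))
```

Because the list is ranked rather than shuffled, the most useful unexplored option always appears first, and once every option has been picked the function returns an empty list.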

5. Overriding Assistant Conversation Exits

Cathy noticed that when she would try to exit the Action using terms like “exit” or “goodbye”, the Action just closed the conversation without saying one of the farewell responses. After some research, I realized that there are a few reserved words that will take over the Action and automatically close the conversation. To override this functionality, all I had to do was add the actions_intent_CANCEL event to my end intent.

Notes from Cathy

In these types of Actions, in which there is not necessarily a clear end point, users often feel more comfortable with explicitly exiting. Therefore, it’s important to allow them to say “goodbye” or “I’m all finished” and give a graceful response before exiting.
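Conceptually, attaching the actions_intent_CANCEL event to the end intent means a cancel request is routed to the same farewell logic as an explicit "goodbye". The sketch below illustrates that routing idea only; it is not the platform's API. The names `route`, `handle_end`, and `END_INTENT`, the "fallback" default, and the farewell strings are all hypothetical.

```python
import random
from typing import Optional, Tuple

CANCEL_EVENT = "actions_intent_CANCEL"  # event name from the text above
END_INTENT = "end"                      # hypothetical intent name

# Hypothetical farewell responses.
FAREWELLS = [
    "Thanks for stopping by. Goodbye!",
    "See you at the next event. Goodbye!",
]


def route(intent: Optional[str], event: Optional[str]) -> str:
    """Send the cancel event to the end intent instead of closing silently."""
    if event == CANCEL_EVENT:
        return END_INTENT
    return intent or "fallback"


def handle_end(rng: random.Random) -> Tuple[str, bool]:
    """Return (farewell, end_conversation) so the user hears a goodbye."""
    return rng.choice(FAREWELLS), True
```

Here `route(None, CANCEL_EVENT)` resolves to the end intent, so the user hears one of the farewell responses before the conversation closes.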

The biggest takeaway is that working alone isn’t ideal, either for you as the developer or for the project you’re building. Most Action developers don’t have access to a conversation designer for every project, or at all. But, hopefully, you have access to other people. Ask them for their thoughts and feedback. Even better, ask someone who isn’t a developer at all; they’ll think of things that are part of natural conversation. If you ever get stuck on how to implement a suggestion, ask in our community groups: Google+ and Stack Overflow.

If you’re interested in learning more about designing Actions, check out Cathy’s latest post: Sample Dialogs: The Key to Creating Great Actions on Google. And, of course, check out our Conversational Design guidelines and developer documentation. Did you already build a great Action? I want to hear what tips you learned. Share your tips with us on Twitter using #AoGDevs!

Want More? Head over to the Actions on Google community to discuss Actions with other developers. Join the Actions on Google developer community program and you could earn a $200 monthly Google Cloud credit and an Assistant t-shirt when you publish your first app.